Could a future version of AirPods read your brain signals for biometric monitoring? Although it sounds far-fetched, the pieces are starting to come together.

According to well-connected Bloomberg reporter Mark Gurman, Apple has long been rumored to be adding health sensors to a future version of AirPods, including sensors that could detect noise levels and body temperature.

Meanwhile, other companies are exploring in-ear wearables aimed at what may be the last frontier of health and wellness technology: the brain.

Companies like Elon Musk’s Neuralink are looking into hardware that’s actually implanted into your brain, but other firms are researching much less invasive options that could, theoretically, fit into a pair of Apple AirPods.

The result could be a brain-computer interface that lets users continuously monitor their brain activity for health and well-being purposes. Here’s what you should know.

A wearable that monitors brain signals

In March, a lesser-known wearables manufacturer called Aware Custom Biometric Wearables announced a new study validating one of its flagship products: the Ear-EEG.

The Ear-EEG is a novel device that fits into your ears like AirPods or other earbuds. Instead of playing music, however, it records brain activity while you go about your day, a technique known as ambulatory recording. Technically, it performs an EEG, or electroencephalogram, which measures the brain’s electrical activity.

According to Aware, the wearable fits deep into the ear canal. This positioning places custom electrodes and sensors near the brain, the auricular branch of the vagus nerve, and major blood vessels.

Aware says this yields high-quality EEG data, real-time brain analysis, and vagus nerve stimulation.

“Aware captures an unprecedented amount of brain data, delivering personalized, AI-driven health and human performance insights into the body and brain in a device you wear in your daily life,” CEO Sam Kellett Jr. said in a press release.

As for the study itself, it validated Aware’s claim that the Ear-EEG can capture medical-grade EEG data. Using machine learning, the system was also able to detect seizures in patients with epilepsy.

Taking a broader view, the company says it’s excited about “the ear’s unique capabilities.” In its view, combining continuous biometric monitoring with AI analytics could yield deeper insights into our neurological health.

“Aware’s method unlocks potential across a wide range of medical applications, enhancing our understanding of neurological health and refining disease management strategies,” said Rob Matthews, CTO of Aware.

Google’s brain signal device

It isn’t just smaller companies that are exploring wearables that read brain waves. NextSense, a Google offshoot, is also researching earbuds that can read the brain’s electrical signals.

More specifically, NextSense is experimenting with a device that fits into the ear canal and performs an EEG, much like Aware’s Ear-EEG. Where Aware’s study focused on seizure detection, NextSense is looking at sleep and neurological conditions, Wired reported.

Also like Aware, the team found that their wearables were surprisingly accurate at detecting oncoming seizures.

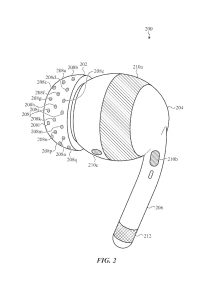

Despite these results, there are reasons to believe the technology isn’t ready for prime time. For example, one of the researchers described EEG as one of “the worst sensors in the world.”

Because of factors like body motion, environmental noise, and surface noise, it can be hard to extract useful data from EEG sensors. On top of that, packing the sensors required for an EEG into an in-ear wearable is no small feat.

However, like Aware, the researchers at NextSense were pleasantly surprised by the accuracy of their results.

“I thought, OK, it shouldn’t work,” John Stivoric, one of the researchers, told Wired. “But it does work. These signals are showing up. How is this even possible?”

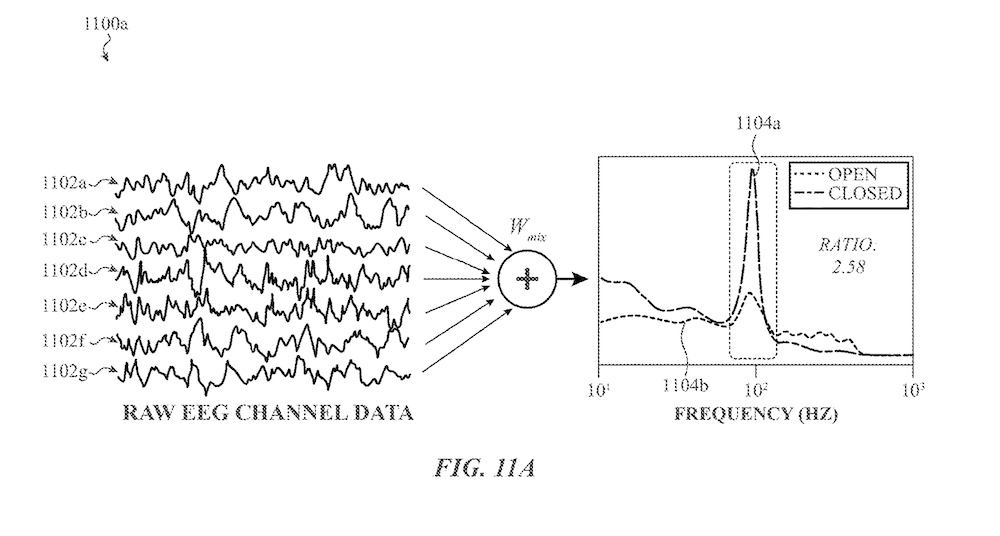

Based on the team’s research, NextSense submitted their

Could AirPods read brain signals?

Theoretically, AirPods could perform an EEG function similar to that of the two wearables described in this article. Although both wearables are clunkier and larger than AirPods, it may only be a matter of time before the sensors can be shrunk down to a manageable size.

Of course, AirPods that can read the electrical activity in your brain are likely years away, but there’s reason to believe that this type of health tech is on Apple’s radar.

Apple has been exploring neuroscience and related technology. For example, back in 2022, Apple began hiring researchers who specialize in neuroscience and engineering, including computational neuroscientists.

More tellingly, in 2023 Apple filed a patent application for an AirPods-like device specifically equipped with EEG sensors.

The application becomes even more interesting where Apple’s researchers describe how the same sensor suite could be embedded in a pair of smart glasses.

So Apple may be working not only on AirPods that monitor brain signals but also on Apple Vision Pro, smart glasses, or similar wearables with the same capabilities.

What could these AirPods help with?

As for why you’d want your AirPods to monitor your brain signals, there is a wide range of potential use cases. Both Aware and NextSense found that their devices were useful in detecting oncoming seizures, for example.

Other potential use cases include:

- Biometric identification

- Long-term sleep monitoring

- Driver drowsiness detection

- Detection of conditions such as inflammation or tumors

And, of course, some promising research suggests that in-ear EEG sensors could be used to create a brain-computer interface. In theory, this type of application could let you control a device with nothing but your thoughts or, more accurately, the electrical signals your brain produces as you think.

Compared with implanted chips, this approach would be far less invasive and likely much more affordable for the general population.

In other words, Apple may not just be researching AirPods that read brain signals, but AirPods that use your brain signals to control your other devices.