Could a screenless wristband with a virtual display and Edge AI capability be the next-gen health monitoring device from Google?

A patent filing published this week suggests that Google might be working on a screenless wearable with edge machine learning abilities.

Wearables and Edge AI

Edge AI means that your wearable can run machine learning models and interpret the results directly on the device, rather than sending the data to a server for processing.

The need for on-device data analysis arises in cases where decisions based on data processing have to be made immediately.

For example, there may not be sufficient time for data to be transferred to back-end servers, or there is no connectivity at all, and your wearable device will have to make a decision.

Complex parameters such as neurological activity or cardiac rhythms can be monitored and analyzed locally within the wearable via edge processing.

Another area that may benefit from edge-based data processing is “ambient intelligence” (AmI). AmI refers to edge devices that are sensitive and responsive to the presence of people.

Google’s Edge AI-based Smartband

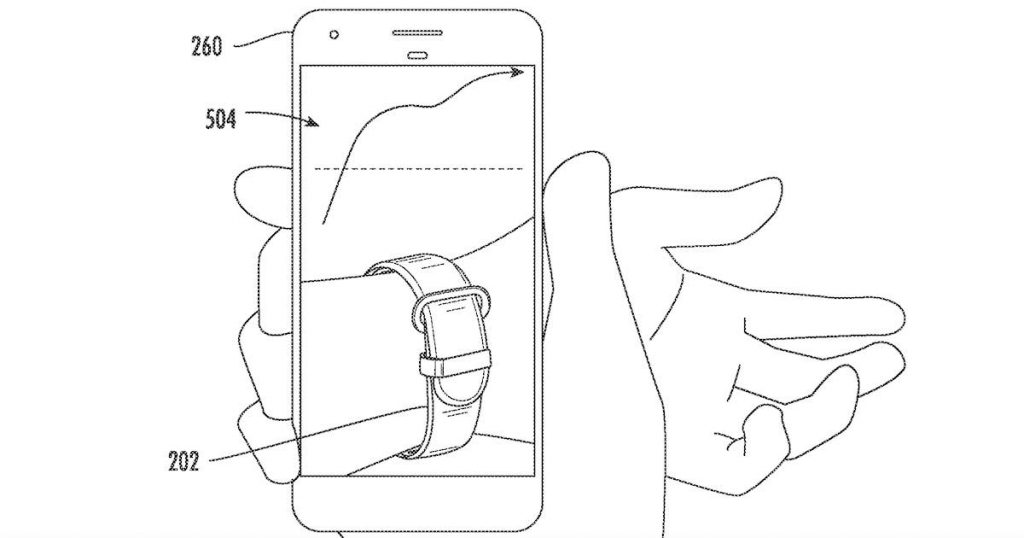

Google’s proposed smart band is a wearable device with one or more sensors that generate data about the user’s physiological characteristics. The band transmits that data to a mobile device or another computer when it detects a proximity event between the wearable and the remote computing device.

“The wristband and a corresponding smartphone app (e.g., device manager) can be configured such that, if a user brings their smartphone within a threshold distance of the wristband, the smartphone display will detect a proximity event and immediately and automatically be triggered to display information content that corresponds to the readings taken by the wristband (e.g., blood pressure, heart rate, etc.).”
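The proximity trigger described in the quote can be sketched in a few lines of Python. This is only an illustration: the threshold value, the `Reading` type, and the function names are assumptions made for the example, and the patent does not specify how distance is measured or what threshold is used.

```python
from dataclasses import dataclass

# Hypothetical threshold; the patent does not state a specific distance.
PROXIMITY_THRESHOLD_M = 0.3


@dataclass
class Reading:
    name: str
    value: float
    unit: str


def is_proximity_event(distance_m: float, threshold_m: float = PROXIMITY_THRESHOLD_M) -> bool:
    """Return True when the phone is within the threshold distance of the band."""
    return distance_m <= threshold_m


def on_distance_update(distance_m: float, readings: list[Reading]) -> list[str]:
    """Return the lines the phone app would display, or an empty list if out of range."""
    if not is_proximity_event(distance_m):
        return []
    return [f"{r.name}: {r.value:g} {r.unit}" for r in readings]


# Made-up readings, used only to exercise the sketch.
readings = [Reading("heart rate", 72, "bpm"), Reading("skin temperature", 33.5, "C")]
print(on_distance_update(1.5, readings))  # phone is far away
print(on_distance_update(0.1, readings))  # phone is next to the band
```

In this sketch the band's readings are shown only once the distance drops to the threshold or below, which mirrors the "immediately and automatically" behavior the patent describes.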

Edge AI on the Google Smartband

The patent also suggests that the wristband may include one or more machine-learned models trained locally at the wearable device using sensor data generated by the wearable device.

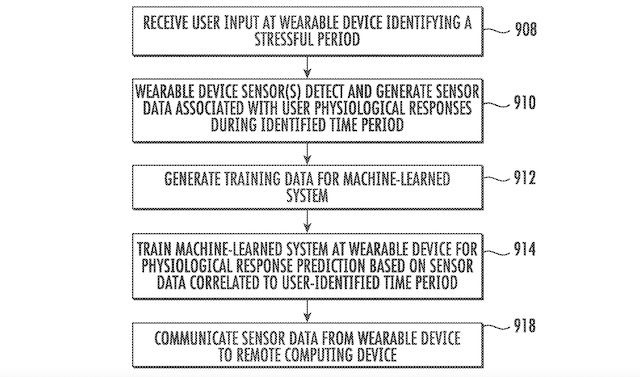

For example, a user can provide an input indicating a particular physiological response or state of the user. For instance, a user may indicate that they are stressed by providing input to the wearable device.

In response, the wearable device can log the sensor data associated with the identified time and other parameters. The sensor data can be annotated to indicate that it corresponds to a stress event.

The annotated sensor data can then be used to generate training data, which trains the machine-learned model right on the wearable device.
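To make the idea of on-device training concrete, here is a minimal sketch that updates a tiny logistic-regression model one annotated sample at a time. The patent does not say which model type Google would use; the `TinyStressModel` class, the two normalized features, and the sample values are all assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyStressModel:
    """Online logistic regression, small enough to update on a wearable (hypothetical)."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features: list[float]) -> float:
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return sigmoid(z)

    def update(self, features: list[float], label: int) -> None:
        """One stochastic gradient step on a single annotated sample."""
        error = self.predict_proba(features) - label
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error


# Made-up annotated samples: (normalized heart rate, normalized EDA), label 1 = stress event.
annotated = [
    ([0.9, 0.8], 1),
    ([0.2, 0.1], 0),
    ([0.8, 0.9], 1),
    ([0.1, 0.3], 0),
]

model = TinyStressModel(n_features=2)
for _ in range(200):  # repeated passes over the small local dataset
    for features, label in annotated:
        model.update(features, label)

print(model.predict_proba([0.85, 0.85]) > 0.5)
print(model.predict_proba([0.15, 0.2]) > 0.5)
```

A single-sample update like this needs no server round trip and very little memory, which is the main appeal of training at the edge.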

One or more machine-learned models may generate a prediction such as a predicted physiological response. A user can provide user confirmation input to confirm that the physiological response prediction was correct or to indicate that the physiological response prediction was incorrect.

The user confirmation input and the associated sensor data can then be used to generate additional training data for further training of one or more machine-learned models.
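One way to picture this feedback loop is as a function that turns a prediction plus the user's yes/no confirmation into a labeled training example. The sketch below assumes a binary stressed / not-stressed prediction; the `Prediction` type and `to_training_example` function are hypothetical names, not anything taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    features: list[float]  # sensor-derived features at prediction time
    stressed: bool         # what the model predicted


def to_training_example(prediction: Prediction, confirmed: bool) -> tuple[list[float], int]:
    """Turn a prediction and the user's confirmation into a labeled sample.

    If the user confirms, the predicted label is kept. If the user rejects it,
    the label is flipped, which only works because the label is binary.
    """
    label = prediction.stressed if confirmed else not prediction.stressed
    return prediction.features, int(label)


training_buffer = [
    to_training_example(Prediction([0.9, 0.8], stressed=True), confirmed=True),
    to_training_example(Prediction([0.3, 0.2], stressed=True), confirmed=False),
]
print(training_buffer)
```

The second example shows the useful case: a wrong prediction that the user corrects becomes a negative training sample.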

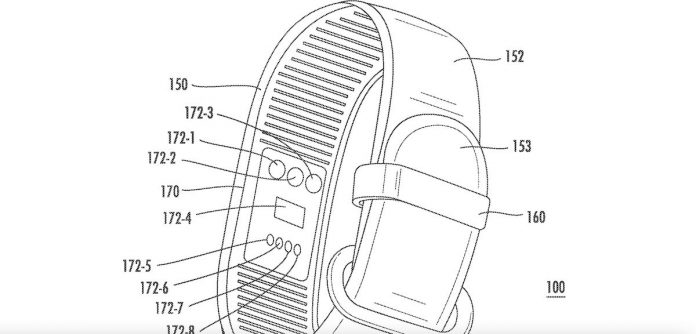

Proposed Sensors on the Google smart wristband

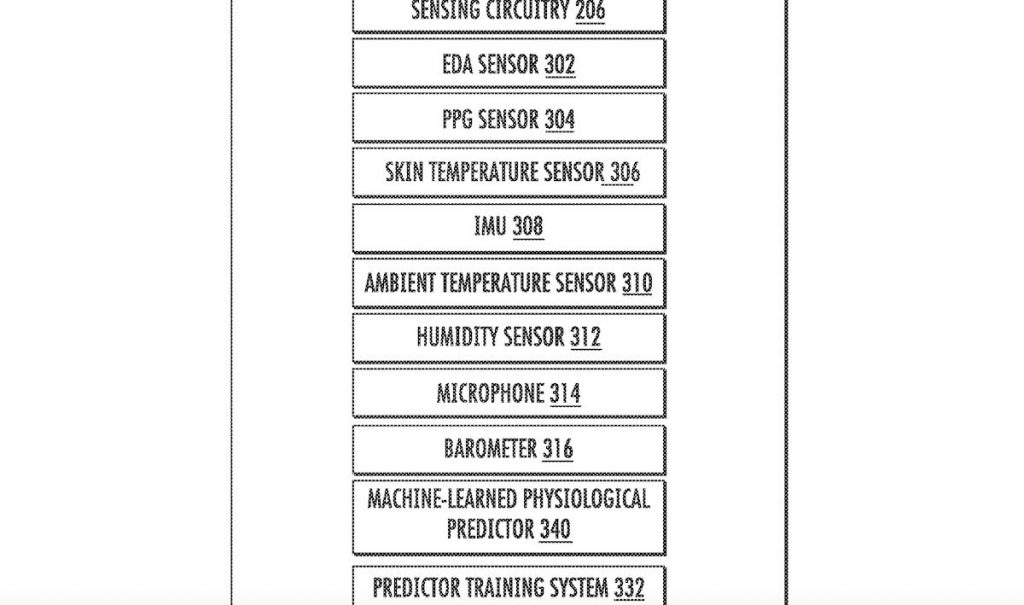

The sensor system on the wristband can include an electrodermal activity sensor (EDA), a photoplethysmogram (PPG) sensor, a skin temperature sensor, and/or an inertial measurement unit (IMU), according to the patent.

Additionally or alternatively, the sensor system can include an electrocardiogram (ECG) sensor, an ambient temperature sensor (ATS), a humidity sensor, a sound sensor such as a microphone (e.g., ultrasonic), an ambient light sensor (ALS), and a barometric pressure sensor (e.g., barometer).

The smart wristband may provide visual, audio, and/or haptic responses to remind a user of the beneficial information on managing stress provided by the remote computing device.

User input will be collected directly from the band as well. For example, if the user experiences a stressful time period, the user can confirm the physiological response prediction by providing a first input (e.g., by applying pressure) to the band of the wearable device.

Since the sensors of a smart wristband will generate sensor data indicative of a user’s heart rate, EDA, and/or blood pressure, among other physiological characteristics, the smart wristband can associate the sensor data with the period of stress identified by the user input to the wearable device.
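As a rough illustration of how sensor data might be associated with a user-identified stress period, the sketch below selects the logged samples that fall in a window ending at the moment of the user's input. The `Sample` fields, the 120-second lookback, and the synthetic log are assumptions for the example; the patent does not specify how the period is bounded.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp_s: float
    heart_rate_bpm: float
    eda_microsiemens: float


def samples_for_stress_period(samples, input_time_s, lookback_s=120.0):
    """Return the samples recorded in the lookback window ending at the user's input."""
    start = input_time_s - lookback_s
    return [s for s in samples if start <= s.timestamp_s <= input_time_s]


# Synthetic log: one sample per minute for ten minutes (values are made up).
log = [Sample(t, 70 + t / 30, 2.0 + t / 300) for t in range(0, 601, 60)]

# The user presses the band at t = 300 s to flag a stressful period.
stress_samples = samples_for_stress_period(log, input_time_s=300.0)
print([s.timestamp_s for s in stress_samples])
```

The selected samples are the ones that would be annotated as a stress event before being used for training.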

Although there are many display-less smart bands out there that help with activity tracking and health monitoring, such as WHOOP, Google’s ability to synthesize sensor information and user input using Edge AI on the band could lead to some very interesting health monitoring use cases.

Amazon is another company with a display-less smart band, the Halo, which offers some advanced capabilities such as analyzing the user’s voice to detect emotions (Tone Analysis).

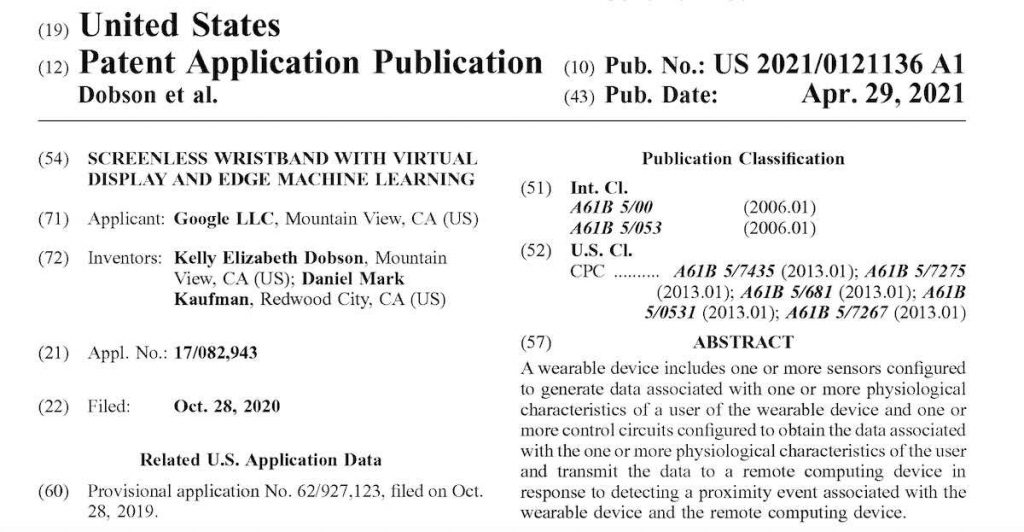

This Google patent was originally filed in October 2020 and published this week (April 29, 2021). The inventors are Kelly Elizabeth Dobson and Daniel Mark Kaufman from Google.

Dan works as the Head of Advanced Technology and Product and is also the Head of Privacy and Security for Hardware at Google, and Kelly Dobson is a leading researcher at the MIT labs.